🏗️ AI Engineering Architecture & Feedback Loops

A comprehensive overview of modern AI system design and continuous improvement

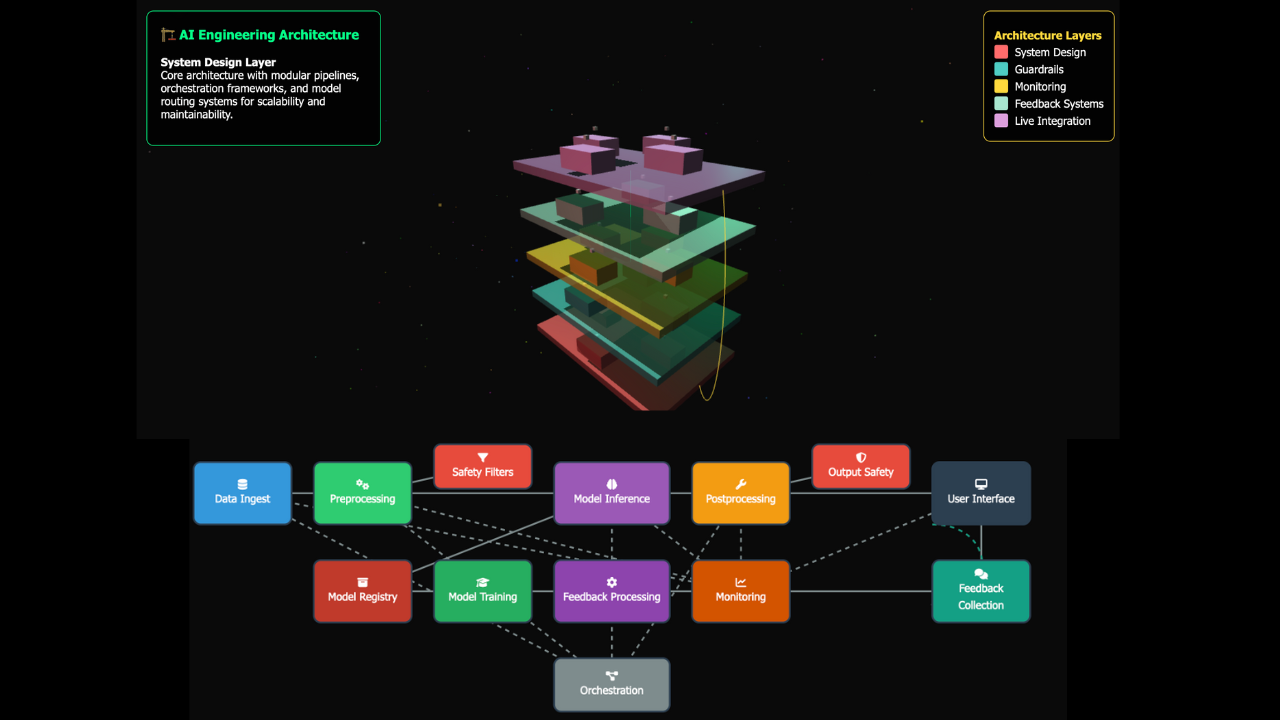

1. System Design

Modular Pipelines

- Stages: data ingest → preprocessing → model inference → postprocessing → feedback collection

- Benefits: easier debugging, testing, and scaling
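The sketch below is a minimal Python pipeline. Each stage is a function that takes a dict and returns a dict, and `run` calls them in order. The stage names follow the list above. The question-mark rule in `infer` is a placeholder assumption standing in for a real model.

```python
from typing import Callable

# Each stage takes and returns a plain dict, so stages can be unit-tested
# in isolation and swapped or reordered without touching the others.
Stage = Callable[[dict], dict]


def ingest(request: dict) -> dict:
    return {"raw": request["text"]}


def preprocess(state: dict) -> dict:
    # Collapse runs of whitespace and lowercase the input.
    return {**state, "clean": " ".join(state["raw"].split()).lower()}


def infer(state: dict) -> dict:
    # Placeholder "model": a real stage would call an inference endpoint here.
    label = "question" if state["clean"].endswith("?") else "statement"
    return {**state, "prediction": label}


def postprocess(state: dict) -> dict:
    return {**state, "response": f"Detected a {state['prediction']}."}


def collect_feedback(state: dict) -> dict:
    # Reserve a slot that a UI could later fill with a rating.
    return {**state, "feedback": None}


PIPELINE: list[Stage] = [ingest, preprocess, infer, postprocess, collect_feedback]


def run(request: dict, stages: list[Stage] = PIPELINE) -> dict:
    state = request
    for stage in stages:
        state = stage(state)
    return state
```

Calling `run({"text": "  How   are you? "})` returns a state whose `response` is `"Detected a question."`. Each stage is an ordinary function, so you can test it alone or replace it without changing the others. For example, you could swap `infer` for a real model call.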

Orchestration Frameworks

- Manage the flow of work between multiple models and tools

- Enable chaining (e.g., RAG → summarizer → classifier)
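Here is a sketch of chaining: a tiny orchestrator that runs named steps in sequence and records each step's output. The retriever, summarizer, and classifier are hypothetical keyword-based stand-ins for real RAG and model calls. Real orchestration frameworks typically add branching, retries, and tool calls on top of this basic pattern.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Orchestrator:
    """Minimal sequential orchestrator: runs named steps and records each output."""
    steps: list[tuple[str, Callable[[Any], Any]]] = field(default_factory=list)

    def add(self, name: str, fn: Callable[[Any], Any]) -> "Orchestrator":
        self.steps.append((name, fn))
        return self  # allow fluent chaining of .add() calls

    def run(self, payload: Any) -> tuple[Any, list[tuple[str, Any]]]:
        trace = []
        for name, fn in self.steps:
            payload = fn(payload)  # each step's output feeds the next step
            trace.append((name, payload))
        return payload, trace


# Toy stand-ins for a retriever, a summarizer model, and a classifier model.
DOCS = ["GPUs accelerate model inference.", "Bananas are yellow.",
        "Batching improves GPU throughput."]


def retrieve(query: str) -> list[str]:
    terms = set(query.lower().split())
    return [d for d in DOCS if terms & set(d.lower().rstrip(".").split())]


def summarize(docs: list[str]) -> str:
    return " ".join(docs)


def classify(summary: str) -> str:
    return "infra" if "gpu" in summary.lower() else "other"


chain = Orchestrator().add("rag", retrieve).add("summarize", summarize).add("classify", classify)
```

`chain.run("gpu throughput")` returns the final label along with a per-step trace. The trace is the kind of record the monitoring section below relies on.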

Model Routers & Ensembles

- Routers: dynamically route queries to different models

- Ensembles: combine multiple model outputs
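Routing and ensembling can each be sketched as a small function. The routing rule here is an assumption: it counts words. The ensemble is a simple majority vote. Real routers may use a learned classifier, and ensembles may average scores instead. `small_model` and `large_model` are hypothetical placeholders.

```python
from collections import Counter
from typing import Callable

Model = Callable[[str], str]


# Hypothetical stand-ins for a cheap model and an expensive model.
def small_model(query: str) -> str:
    return "small:" + query


def large_model(query: str) -> str:
    return "large:" + query


def route(query: str, max_small_words: int = 8) -> Model:
    """Rule-based router: short queries go to the cheaper model."""
    return small_model if len(query.split()) <= max_small_words else large_model


def ensemble_vote(models: list[Model], query: str) -> str:
    """Majority vote over model outputs; ties go to the output seen first."""
    outputs = [model(query) for model in models]
    counts = Counter(outputs)
    best = max(counts.values())
    return next(out for out in outputs if counts[out] == best)
```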

Microservices vs. Monolithic

- A microservice design lets each AI component scale independently

- Monolithic systems are simpler but less flexible

2. Guardrails

Safety Filters

- Pre-inference: sanitize inputs

- Post-inference: block harmful content
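This minimal sketch covers both filter positions. On the input side, it normalizes Unicode, drops control characters, and caps the length. On the output side, it checks a phrase blocklist. The blocklist entries and length cap are placeholders. Production systems usually rely on trained safety classifiers rather than string matching.

```python
import unicodedata

MAX_INPUT_CHARS = 2000  # assumed limit; tune per product
# Placeholder phrases only; real systems typically use a trained safety classifier.
BLOCKLIST = {"forbidden phrase", "another banned term"}


def sanitize_input(text: str) -> str:
    """Pre-inference: normalize Unicode, drop control/format characters
    (keeping newlines and tabs), and cap the length."""
    text = unicodedata.normalize("NFKC", text)
    text = "".join(
        ch for ch in text
        if not unicodedata.category(ch).startswith("C") or ch in "\n\t"
    )
    return text[:MAX_INPUT_CHARS].strip()


def filter_output(text: str, refusal: str = "[response withheld by safety filter]") -> str:
    """Post-inference: withhold the whole response if it contains a blocked phrase."""
    lowered = text.lower()
    return refusal if any(term in lowered for term in BLOCKLIST) else text
```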

Bias & Toxicity Detection

- Bias detectors measure demographic skew

- Toxicity filters prevent unsafe outputs
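One common way to quantify demographic skew is the demographic parity gap: the largest difference in positive-outcome rate between any two groups. The sketch assumes binary outcomes, where 1 is positive (for example, a response rated helpful). It also assumes group labels are already available. This is one metric among many, not a complete bias audit.

```python
from collections import defaultdict


def positive_rates(groups: list, outcomes: list) -> dict:
    """Share of positive (1) outcomes for each demographic group."""
    if len(groups) != len(outcomes):
        raise ValueError("groups and outcomes must have the same length")
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups: list, outcomes: list) -> float:
    """Largest difference in positive-outcome rate between any two groups."""
    rates = positive_rates(groups, outcomes)
    return max(rates.values()) - min(rates.values())
```

A monitoring job could compute this gap on each batch of logged decisions. It would then alert when the gap exceeds a threshold the team has agreed on.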

Policy Enforcement

- Regulatory compliance (HIPAA, GDPR, COPPA)

- Content guidelines (brand tone, legal restrictions)
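Policy enforcement often includes stripping personal data before prompts or outputs are stored. This sketch redacts email addresses and US-style phone numbers with regular expressions. The patterns are illustrative assumptions. On their own they are not sufficient for HIPAA or GDPR compliance.

```python
import re

# Illustrative patterns only; real compliance work needs far more than regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace each PII match with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```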

3. Monitoring & Observability

Drift Detection

- Input data drift (new slang, domain shifts)

- Output drift (model behavior changes)
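The Population Stability Index (PSI) can track input drift on a numeric feature, such as prompt length in tokens. It compares the binned distribution of live traffic with a reference window. PSI is one technique among several; KS tests and embedding-distance checks are alternatives. The same function can also watch output drift if you point it at a feature such as response length.

```python
import math


def psi(expected: list, actual: list, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against a reference sample.

    Bin edges are equal-width over the reference range; live values outside
    that range are clamped into the first or last bin. `eps` avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(sample: list) -> list:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [c / len(sample) + eps for c in counts]

    ref, live = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for a, e in zip(live, ref))
```

Identical samples give a PSI of 0.0. A commonly quoted rule of thumb treats values above roughly 0.2 as a significant shift. Calibrate the threshold on your own traffic.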

Performance Metrics

- Latency, throughput, cost per query

- Accuracy proxies: user ratings, task success rates
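The latency, throughput, and cost metrics above can be computed from per-request records. This sketch assumes each record carries its latency and token counts. It also assumes billing per 1,000 input and output tokens at prices you supply. Percentiles use the nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    input_tokens: int
    output_tokens: int


def percentile(values: list, p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize_metrics(records: list, window_s: float,
                      usd_per_1k_in: float, usd_per_1k_out: float) -> dict:
    """Latency percentiles, throughput over the window, and mean cost per query."""
    latencies = [r.latency_ms for r in records]
    total_cost = sum(
        r.input_tokens / 1000 * usd_per_1k_in + r.output_tokens / 1000 * usd_per_1k_out
        for r in records
    )
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_qps": len(records) / window_s,
        "cost_per_query_usd": total_cost / len(records),
    }
```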

Logging & Tracing

- Full record of prompts, model versions, and outputs

- Request-level tracing to debug issues
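Request-level tracing can be sketched with a small span recorder. It groups timed steps under one `trace_id` and serializes them as JSON. The `Trace` class is a hypothetical minimal stand-in. Standards such as OpenTelemetry provide the production version of this idea.

```python
import json
import time
import uuid
from contextlib import contextmanager
from typing import Optional


class Trace:
    """Collects timed spans for one request under a shared trace_id."""

    def __init__(self, trace_id: Optional[str] = None):
        self.trace_id = trace_id or uuid.uuid4().hex
        self.spans = []

    @contextmanager
    def span(self, name: str, **attrs):
        start = time.perf_counter()
        try:
            yield attrs  # the caller may add fields (e.g. the output) while the span is open
        finally:
            # Record the span even if the wrapped step raised an exception.
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.spans.append({"name": name, "ms": round(elapsed_ms, 3), **attrs})

    def to_json(self) -> str:
        return json.dumps({"trace_id": self.trace_id, "spans": self.spans})
```

For example, `with trace.span("generate", model_version="v1", prompt=prompt) as fields:` followed by `fields["output"] = answer` produces one span. That span records the prompt, model version, output, and duration together.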

Usage Analytics

- Track which features users rely on most

- Helps prioritize improvements
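This sketch ranks features by usage. It assumes events arrive as dicts with `user_id` and `feature` keys, a hypothetical schema. It counts distinct users per feature rather than raw events, so a single heavy user does not dominate the ranking.

```python
from collections import Counter


def top_features(events: list, n: int = 3) -> list:
    """Rank features by number of distinct users; return the top n as (feature, users)."""
    users_by_feature = {}
    for event in events:
        users_by_feature.setdefault(event["feature"], set()).add(event["user_id"])
    counts = Counter({feature: len(users) for feature, users in users_by_feature.items()})
    return counts.most_common(n)
```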

4. Feedback Systems

Explicit Feedback

- Ratings (thumbs up/down, 1–5 stars)

- Corrections (the user edits the AI's response)

Implicit Feedback

- Click-through rates (was the answer useful?)

- Dwell time & abandonment signals

- Repeat queries (user dissatisfaction proxy)
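The repeat-query signal can be approximated by flagging a query that closely rephrases the previous one within a short window. The sketch uses token-set (Jaccard) similarity. The 120-second window and 0.6 threshold are assumptions you would tune. Embedding similarity would also catch paraphrases that share few words.

```python
def jaccard(a: str, b: str) -> float:
    """Token-set overlap between two queries, from 0.0 to 1.0."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def flag_repeat_queries(session: list, window_s: float = 120.0,
                        threshold: float = 0.6) -> list:
    """Flag each query that closely matches the previous one and arrives
    within `window_s` seconds of it (a likely rephrase after a poor answer).

    `session` is a time-ordered list of (timestamp_seconds, query) tuples.
    """
    if not session:
        return []
    flags = [False]  # the first query has nothing to repeat
    for (t_prev, q_prev), (t_cur, q_cur) in zip(session, session[1:]):
        flags.append(t_cur - t_prev <= window_s and jaccard(q_prev, q_cur) >= threshold)
    return flags
```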

Feedback Capture Pipelines

- Collect feedback with minimal friction

- Store in structured form (JSON logs, databases)
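A structured feedback record might look like the dataclass below. It validates explicit signals (thumbs, stars, corrections) and serializes each one as a single JSON line. The field names and the `trace_id` link are assumptions. The point is that every feedback event can be joined back to the logged request it refers to.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

KINDS = ("thumbs", "stars", "correction")


@dataclass
class FeedbackEvent:
    trace_id: str                         # links feedback to the logged request
    kind: str                             # one of KINDS
    value: Optional[int] = None           # +1/-1 for thumbs, 1-5 for stars
    corrected_text: Optional[str] = None  # the user's edited response

    def validate(self) -> None:
        if self.kind not in KINDS:
            raise ValueError(f"unknown feedback kind: {self.kind}")
        if self.kind == "thumbs" and self.value not in (1, -1):
            raise ValueError("thumbs value must be +1 or -1")
        if self.kind == "stars" and not (isinstance(self.value, int) and 1 <= self.value <= 5):
            raise ValueError("stars value must be an integer from 1 to 5")
        if self.kind == "correction" and not self.corrected_text:
            raise ValueError("a correction needs corrected_text")

    def to_json_line(self) -> str:
        """Validate, then serialize as one JSON line (e.g. for an append-only log)."""
        self.validate()
        return json.dumps(asdict(self), sort_keys=True)
```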

5. Live Feedback Integration

Continuous Learning Loops

- Validate feedback → clean data → retrain/fine-tune → redeploy

- Can be batch or streaming
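One batch iteration of the loop can be sketched as follows. The `train` and `evaluate` callables are hypothetical hooks for your own fine-tuning and evaluation code. The validation rule, which requires a prompt plus a non-empty preferred response, is an assumption. A streaming setup would call the same step on smaller micro-batches.

```python
from typing import Callable


def continuous_learning_step(
    feedback: list,
    current_model: dict,
    train: Callable[[dict, list], dict],
    evaluate: Callable[[dict], float],
    min_examples: int = 100,
) -> dict:
    """One batch iteration: validate -> clean -> retrain -> redeploy (if better)."""
    # Validate: keep only records with a prompt and a non-empty preferred response.
    valid = [f for f in feedback if f.get("prompt") and f.get("preferred")]
    # Clean: drop exact duplicates, keeping the first occurrence.
    seen, cleaned = set(), []
    for f in valid:
        key = (f["prompt"], f["preferred"])
        if key not in seen:
            seen.add(key)
            cleaned.append(f)
    if len(cleaned) < min_examples:
        return current_model  # not enough signal yet; keep serving the current model
    candidate = train(current_model, cleaned)
    # Redeploy only if the candidate beats the current model on evaluation.
    return candidate if evaluate(candidate) > evaluate(current_model) else current_model
```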

RLHF-style Updates

- Train reward models from human preference data

- Fine-tune base models to align with user expectations
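The reward-model half of RLHF is commonly trained with a pairwise (Bradley-Terry) loss: -log sigmoid(r(chosen) - r(rejected)). This loss pushes the reward of the preferred response above that of the rejected one. The sketch fits a linear reward over hand-made feature vectors using plain gradient descent. Real reward models are neural networks over text. The later policy fine-tuning step (for example, with PPO) is not shown.

```python
import math


def reward(w: list, x: list) -> float:
    """Linear reward model: dot product of weights and response features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def pairwise_loss(w: list, chosen: list, rejected: list) -> float:
    """Bradley-Terry loss: -log sigmoid(r(chosen) - r(rejected))."""
    margin = reward(w, chosen) - reward(w, rejected)
    return math.log1p(math.exp(-margin))


def train_reward_model(pairs: list, dim: int, lr: float = 0.1, epochs: int = 200) -> list:
    """Fit weights by stochastic gradient descent on (chosen, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # The loss gradient w.r.t. w is -(1 - sigmoid(margin)) * (chosen - rejected),
            # so a descent step adds lr * (1 - sigmoid(margin)) * (chosen - rejected).
            step = lr * (1.0 - 1.0 / (1.0 + math.exp(-margin)))
            w = [wi + step * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w
```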

Shadow Deployment

- Test updated models silently alongside production

- Collect feedback before replacing the live system
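In the shadow pattern, the production model answers the user. Meanwhile, the candidate model runs on the same input, and both outputs are logged for comparison. `prod_model` and `shadow_model` are any callables you supply. This version calls the shadow synchronously for simplicity. A real deployment would usually run it asynchronously so it adds no user-facing latency.

```python
def serve_with_shadow(query: str, prod_model, shadow_model, shadow_log: list) -> str:
    """Answer with the production model; run the shadow model on the same input and
    log both outputs. Errors in the shadow path are recorded, never shown to users."""
    prod_out = prod_model(query)
    try:
        shadow_out = shadow_model(query)
    except Exception as exc:  # the shadow must never break production traffic
        shadow_out = f"<shadow error: {exc!r}>"
    shadow_log.append({"query": query, "prod": prod_out, "shadow": shadow_out})
    return prod_out


def agreement_rate(shadow_log: list) -> float:
    """Fraction of logged requests where the shadow matched production exactly."""
    if not shadow_log:
        return 0.0
    return sum(r["prod"] == r["shadow"] for r in shadow_log) / len(shadow_log)
```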

Guarded Adaptation

- Always validate retrained models with safety checks

- Regression checks before full rollout
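Guarded adaptation can be expressed as a release gate that a retrained model must pass before rollout. The metric names, the 0.99 safety pass-rate floor, and the 0.01 regression tolerance are illustrative assumptions. All metrics are treated as higher-is-better scores.

```python
def release_gate(baseline: dict, candidate: dict, max_regression: float = 0.01,
                 min_safety_pass_rate: float = 0.99) -> tuple:
    """Return (approved, reasons). Metrics are higher-is-better scores in [0, 1]."""
    reasons = []
    # Safety check: the candidate must clear an absolute floor.
    if candidate.get("safety_pass_rate", 0.0) < min_safety_pass_rate:
        reasons.append("safety pass rate below threshold")
    # Regression check: no baseline metric may drop by more than the tolerance.
    for metric, base_score in baseline.items():
        cand_score = candidate.get(metric)
        if cand_score is None:
            reasons.append(f"missing metric: {metric}")
        elif base_score - cand_score > max_regression:
            reasons.append(f"regression on {metric}: {base_score:.3f} -> {cand_score:.3f}")
    return (not reasons, reasons)
```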

AI System Architecture & Feedback Flow


I like how you broke down the difference between modular pipelines and orchestration frameworks—too often those get lumped together even though they solve different problems. One thing I’d add is that feedback loops are only as strong as the quality of the signals you collect; user ratings can be noisy, so pairing them with implicit metrics (like task completion or abandonment rates) makes the monitoring much more reliable.
