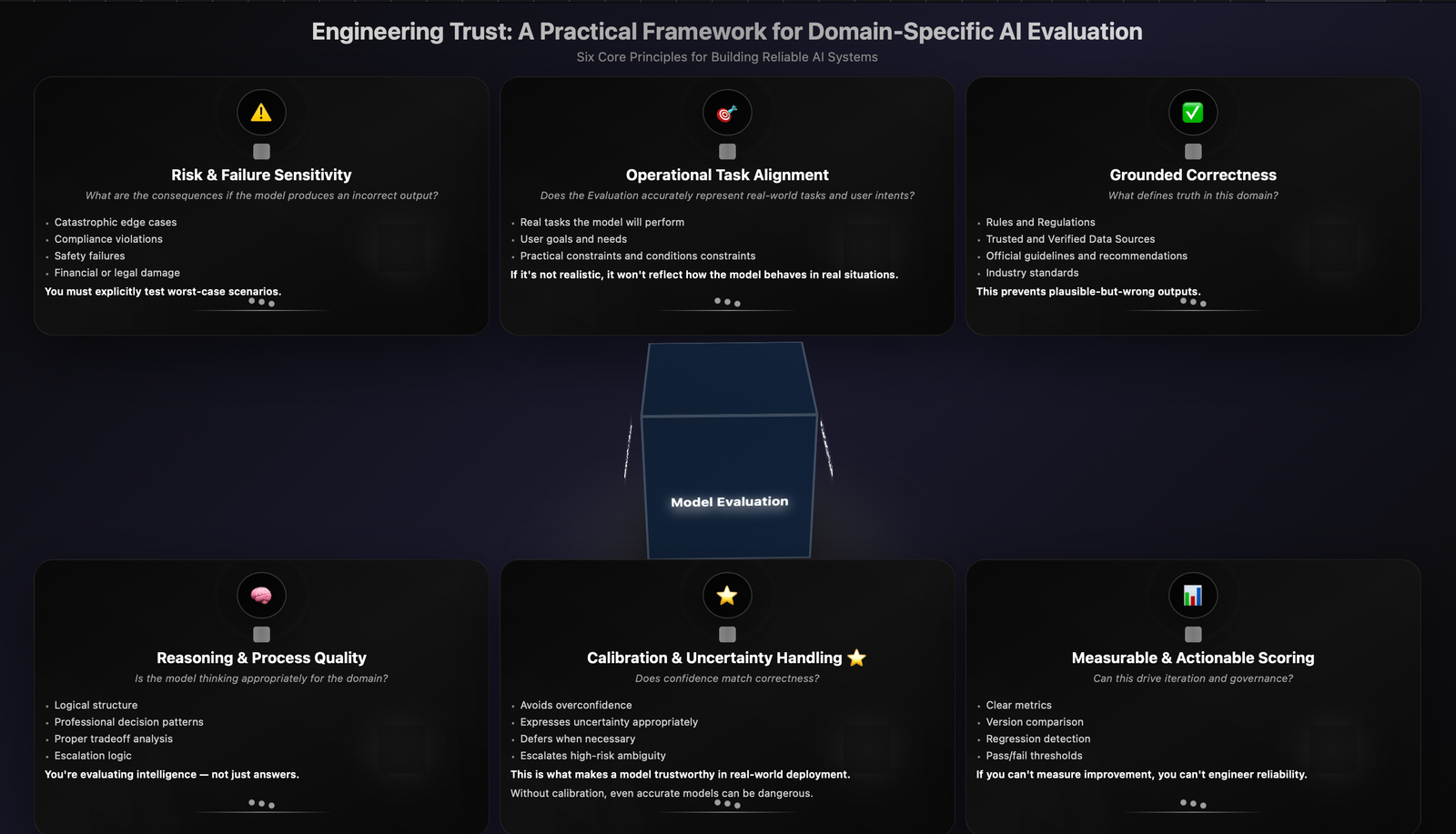

Engineering Trust: A Practical Framework for Domain-Specific AI Evaluation

Six Core Principles for Building Reliable AI Systems

⚠️ 1️⃣ Risk & Failure Sensitivity

What are…