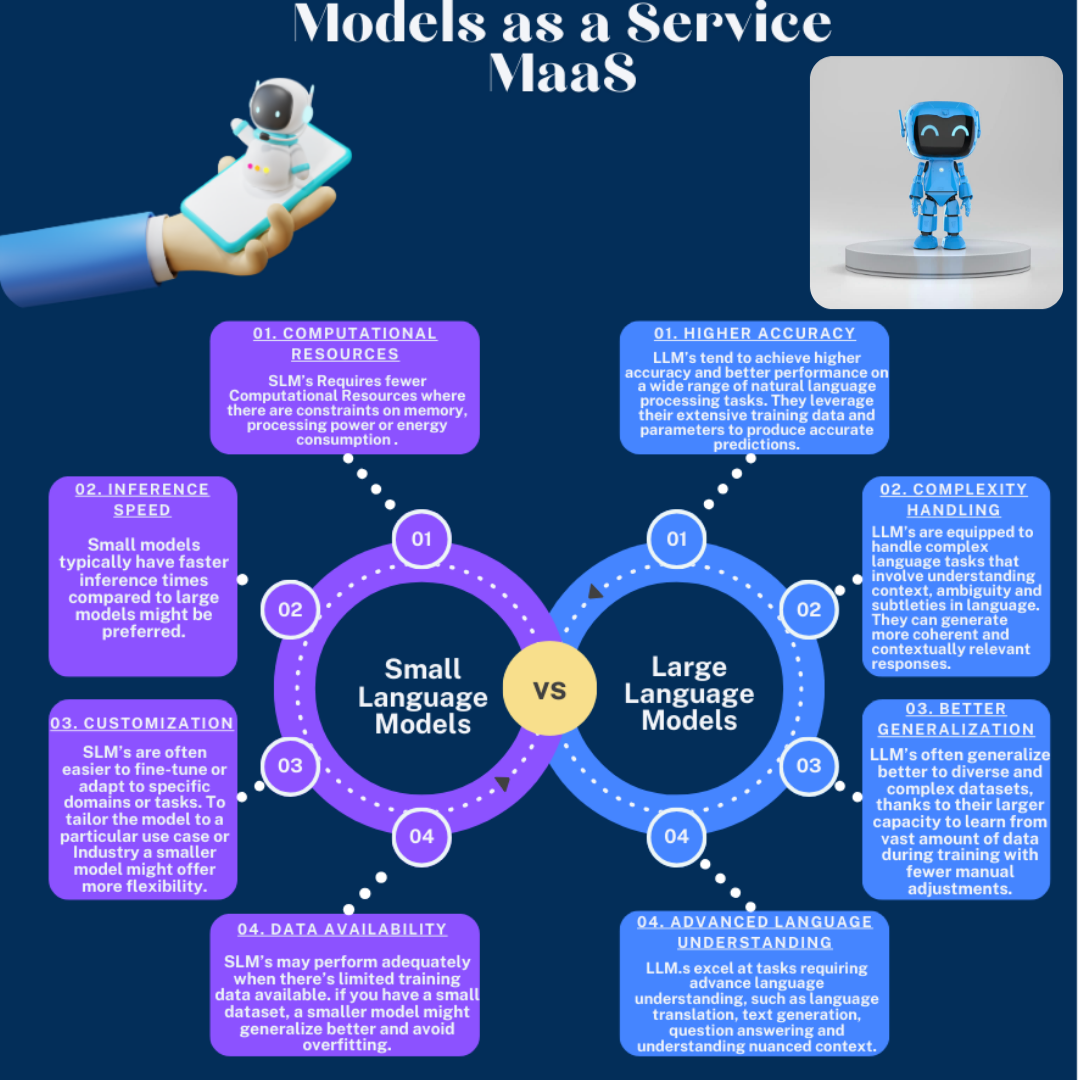

Choosing Small Language Models (SLMs) over Large Language Models (LLMs) depends on factors such as the specific task, the computational resources available, and the performance required.

Examples of SLMs include:

- DistilBERT

- Orca 2

- Phi-2

- BERT Tiny, Mini, Small, and Medium

- GPT-Neo and GPT-J

- MobileBERT

- T5-small

Examples of LLMs include:

- GPT-4

- BERT (Large)

- Claude

- Cohere

- ERNIE

- Gemini

- Gemma

- GPT-3

- GPT-3.5

- Llama

- LaMDA

Ultimately, the choice between small and large language models comes down to balancing model performance, computational resources, and task requirements.

Let's look at the differences between using SLMs and LLMs.

Small Language Models (SLMs)

SLMs require fewer computational resources, which makes them a good fit where memory, processing power, or energy consumption is constrained.
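
To make the resource gap concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold the model weights, as parameter count times bytes per parameter. The parameter counts are approximate published figures, and the helper name `weight_memory_gb` is hypothetical. Activations, caches, and framework overhead are ignored, so real usage will be higher.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: only the weights are counted; activations, caches,
# and framework overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Approximate published parameter counts (illustrative, not exact).
APPROX_PARAMS = {
    "DistilBERT": 66e6,
    "Phi-2": 2.7e9,
    "GPT-J": 6e9,
    "GPT-3": 175e9,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return approximate memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, n in APPROX_PARAMS.items():
        print(f"{name:>10}: {weight_memory_gb(n):8.2f} GB in fp16")
```

Under these assumptions, a model with tens of millions of parameters fits comfortably on a phone or a small CPU instance, while a model with hundreds of billions of parameters needs several accelerators just to load.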

Small models typically have faster inference times than large models, which may be preferable for latency-sensitive applications.

Small models may perform adequately when training data is limited. With a small dataset, a smaller model may generalize better because it has less capacity to overfit.

Small models are often easier to fine-tune or adapt to specific domains or tasks. If you need to tailor the model to a particular use case or industry, a smaller model may offer more flexibility.

Large Language Models (LLMs)

LLMs tend to achieve higher accuracy and better performance than SLMs across a wide range of natural language processing tasks. Their extensive training data and large parameter counts let them produce more accurate predictions and outputs.

LLMs are better equipped to handle complex language tasks that involve understanding context, ambiguity, and subtleties in language. They can generate more coherent and contextually relevant responses in conversational agents and chatbots.

LLMs often generalize better on diverse and complex datasets, thanks to their larger capacity to learn from vast amounts of data during training. They can handle a wider range of inputs and tasks with fewer manual adjustments.

LLMs excel at tasks requiring advanced language understanding, such as language translation, text generation, question answering, and interpreting nuanced context. Their larger size enables them to capture more complex patterns and relationships in data.
